Rust

For several years running, Rust has topped the "most loved" language ranking in the Stack Overflow Developer Survey, despite its relatively small adoption. To us engineer types, "most loved" may sound subjective (and it is), but does that matter? Does it translate into a competitive advantage?

Rust is still a hacker language. It isn't in widespread university curricula yet, though that is a lagging indicator. Few job postings list it as a requirement. Its learning curve is steep, as you'd expect of a systems programming language. So why make the leap?

This reminds me of Paul Graham's "The Python Paradox" from the early 2000s. Back then there was no good reason for me to invest in learning Python: most projects were built on Java, C++, or PHP, and scripting was dominated by Perl. You could say it wasn't a smart investment on my part. But the truth is that I learned Python because I thought the world could do better, and so did the Python community.

Rust has the same passionate, highly motivated user base. As I build out engineering teams, that drive for excellence matters more to me than anything I see on a resume or in a coding challenge. If you are investing in Rust, you are likely an innovator with the drive to take programming to the next level.

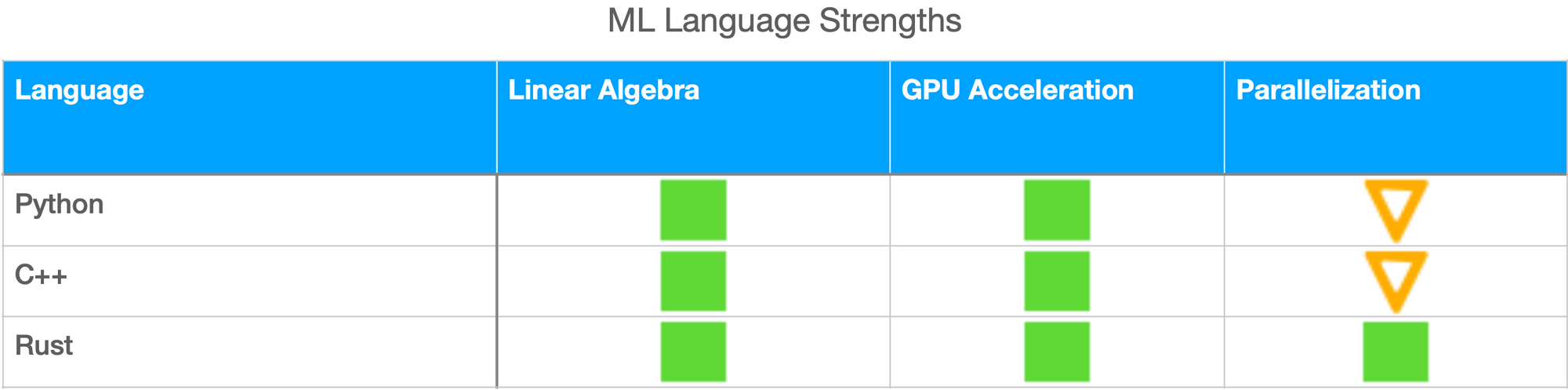

ML Language Features

Let's consider the key language features for machine learning and compare them across Python (preferred for prototyping), C++ (the performance incumbent), and Rust.

Linear Algebra

At the heart of machine learning is linear algebra, so the key programming features are operator overloading and solid linear algebra libraries. All three languages hold their ground here, with NumPy for Python, Eigen for C++, and crates such as ndarray and nalgebra for Rust. This is a big reason Python and C++ see heavy use among scientists.
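To make the operator-overloading point concrete, here is a minimal std-only Rust sketch. Vec2 and Mat2 are hypothetical types written for illustration, not part of any library; real code would reach for a crate like ndarray or nalgebra. Implementing the std::ops traits Add and Mul lets a matrix-vector product plus an offset read like the math.

```rust
use std::ops::{Add, Mul};

/// Hypothetical 2-D vector type, for illustration only.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Vec2 {
    x: f64,
    y: f64,
}

/// Hypothetical 2x2 matrix stored in row-major order.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Mat2 {
    m: [[f64; 2]; 2],
}

// Element-wise vector addition: enables `u + v`.
impl Add for Vec2 {
    type Output = Vec2;
    fn add(self, rhs: Vec2) -> Vec2 {
        Vec2 { x: self.x + rhs.x, y: self.y + rhs.y }
    }
}

// Matrix-vector product: enables `a * v`.
impl Mul<Vec2> for Mat2 {
    type Output = Vec2;
    fn mul(self, v: Vec2) -> Vec2 {
        Vec2 {
            x: self.m[0][0] * v.x + self.m[0][1] * v.y,
            y: self.m[1][0] * v.x + self.m[1][1] * v.y,
        }
    }
}

fn main() {
    let a = Mat2 { m: [[1.0, 2.0], [3.0, 4.0]] };
    let v = Vec2 { x: 1.0, y: 1.0 };
    let b = Vec2 { x: 0.5, y: -0.5 };
    // Affine map a*v + b, written with the overloaded operators.
    let out = a * v + b;
    println!("{:?}", out);
}
```

Because both types are Copy, the operators take their operands by value without any explicit cloning; larger tensor types usually implement the traits on references instead, which is why the tch-rs example below writes &x * &x.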

GPU Acceleration

Running performant ML at scale relies on the ability of GPUs to execute linear algebra operations massively in parallel. Again, all three languages offer solid support.

Parallelization

ML products are pushing both GPUs and CPUs to their limits, and so first-class parallelization support is key.

In Python it's easy to write multithreaded code, but that ease of use comes at a price: the infamous Global Interpreter Lock (GIL) lets only one thread execute Python bytecode at a time, so CPU-bound threads don't truly run in parallel.

C++11 brought standard threading (std::thread and a formal memory model) into the language, but writing thread-safe code in complex systems still takes extreme dedication, because the compiler won't catch a data race for you. Larger teams can hire for this expertise, but for startups and fast-moving teams it can be a hindrance.

This is where Rust starts to differentiate itself: it checks thread safety aggressively at compile time through ownership, lifetimes, and the Send and Sync marker traits, so in safe Rust a data race is a compile error rather than a production bug. The learning curve for this is steep, but the pot of performance gold at the end of the rainbow awaits.
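As a small illustration of those compile-time checks, here is a std-only sketch of a parallel dot product using scoped threads (std::thread::scope, stable since Rust 1.63). The function name parallel_dot and the chunking scheme are hypothetical choices for this example; in practice a crate like rayon handles this kind of work splitting.

```rust
use std::thread;

/// Hypothetical helper: dot product computed by splitting the inputs into
/// `n_threads` chunks and summing each chunk on its own scoped thread.
fn parallel_dot(a: &[f64], b: &[f64], n_threads: usize) -> f64 {
    assert_eq!(a.len(), b.len(), "inputs must have equal length");
    assert!(n_threads > 0, "need at least one thread");
    // Round up so every element lands in a chunk; chunks(0) would panic.
    let chunk = ((a.len() + n_threads - 1) / n_threads).max(1);

    thread::scope(|s| {
        // Each thread only borrows its slices immutably. The compiler proves
        // the borrows outlive the scope, so no Arc or Mutex is needed. Trying
        // to mutate shared data here, or to move an Rc (which is not Send)
        // into a thread, would be rejected at compile time.
        let handles: Vec<_> = a
            .chunks(chunk)
            .zip(b.chunks(chunk))
            .map(|(xa, xb)| {
                s.spawn(move || xa.iter().zip(xb).map(|(x, y)| x * y).sum::<f64>())
            })
            .collect();
        // join() returns Err only if a worker thread panicked.
        handles.into_iter().map(|h| h.join().unwrap()).sum::<f64>()
    })
}

fn main() {
    let a: Vec<f64> = (1..=1000).map(|i| i as f64).collect();
    let b = vec![2.0; 1000];
    println!("{}", parallel_dot(&a, &b, 4));
}
```

Splitting by contiguous chunks keeps each thread's memory access sequential; the trade-off is that the partial sums are combined in a different order than a serial loop would use, which can change the last bits of a floating-point result on non-integer data.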

PyTorch with Rust

With those key language features covered, we still need ecosystem support from established ML frameworks in Rust. Luckily, much work has already been done here.

PyTorch's core is libtorch, its C++ library, which we can access from Rust through tch-rs, a crate of Rust bindings to libtorch.

PyTorch with Rust and tch-rs

We can compare Rust and Python with a simple example that illustrates the use of the Tensor object and a wee bit of linear algebra.

This example is taken from tch-rs/examples/basics.rs:

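```rust
use tch::{kind, Tensor};

fn grad_example() {
    // Scalar leaf tensor that records operations for autograd.
    let mut x = Tensor::from(2.0).set_requires_grad(true);
    // y = x^2 + x + 36; borrowing (&x) keeps x usable afterwards.
    let y = &x * &x + &x + 36;
    println!("{}", y.double_value(&[]));
    x.zero_grad();
    // Backpropagate, then read dy/dx from x's gradient.
    y.backward();
    let dy_over_dx = x.grad();
    println!("{}", dy_over_dx.double_value(&[]));
}

fn main() {
    tch::maybe_init_cuda();
    // Written against an older tch release; newer releases use
    // Tensor::from_slice in place of Tensor::of_slice.
    let t = Tensor::of_slice(&[3, 1, 4, 1, 5]);
    t.print();
    // 5x4 tensor of standard-normal samples: f32 elements on the CPU.
    let t = Tensor::randn(&[5, 4], kind::FLOAT_CPU);
    t.print();
    (&t + 1.5).print();
    (&t + 2.5).print();
    let mut t = Tensor::of_slice(&[1.1f32, 2.1, 3.1]);
    t += 42;
    t.print();
    println!("{:?} {}", t.size(), t.double_value(&[1]));
    grad_example();
    println!("Cuda available: {}", tch::Cuda::is_available());
    println!("Cudnn available: {}", tch::Cuda::cudnn_is_available());
}
```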

Python equivalent:

```python
import torch

def grad_example():
    x = torch.tensor(2., requires_grad=True)
    y = x * x + x + 36
    print(y.item())
    # x.grad is None until the first backward pass.
    if x.grad is not None:
        x.grad.zero_()
    y.backward()
    dy_over_dx = x.grad
    print(dy_over_dx.item())

t = torch.tensor([3, 1, 4, 1, 5])
print(t)
t = torch.randn([5, 4], device=torch.device('cpu'))
print(t)
print(t + 1.5)
print(t + 2.5)
t = torch.tensor([1.1, 2.1, 3.1])
t += 42
print(t)
print(t.size(), t[1].item())
grad_example()
print(f'cuda available: {torch.cuda.is_available()}')
print(f'cudnn available: {torch.backends.cudnn.is_available()}')
```

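The two versions are nearly 1:1 in lines of code. The main differences are Rust's explicit borrows (&x * &x) and its typed Tensor constructors, such as kind::FLOAT_CPU, which pins down both the element kind and the device. The last two lines also confirm that GPU acceleration features (CUDA and cuDNN) are accessible from Rust.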
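For reference, grad_example evaluates y = x^2 + x + 36 at x = 2, so both versions should print y = 42 and then dy/dx = 2x + 1 = 5. As a sanity check that doesn't depend on libtorch, here is a std-only sketch using forward-mode dual numbers. Note that this is a different technique from PyTorch's reverse-mode autograd, chosen only because it fits in a few lines; the Dual type is hypothetical.

```rust
use std::ops::{Add, Mul};

/// Hypothetical dual number val + der*eps with eps^2 = 0:
/// `der` carries the derivative with respect to the input.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Dual {
    val: f64,
    der: f64,
}

impl Dual {
    /// Seeds the independent variable: dx/dx = 1.
    fn var(x: f64) -> Self {
        Dual { val: x, der: 1.0 }
    }
    /// A constant has zero derivative.
    fn constant(c: f64) -> Self {
        Dual { val: c, der: 0.0 }
    }
}

impl Add for Dual {
    type Output = Dual;
    // Sum rule: (u + v)' = u' + v'.
    fn add(self, rhs: Dual) -> Dual {
        Dual { val: self.val + rhs.val, der: self.der + rhs.der }
    }
}

impl Mul for Dual {
    type Output = Dual;
    // Product rule: (uv)' = u'v + uv'.
    fn mul(self, rhs: Dual) -> Dual {
        Dual {
            val: self.val * rhs.val,
            der: self.der * rhs.val + self.val * rhs.der,
        }
    }
}

fn main() {
    // Same function as grad_example: y = x*x + x + 36 at x = 2.
    let x = Dual::var(2.0);
    let y = x * x + x + Dual::constant(36.0);
    println!("{}", y.val); // prints 42
    println!("{}", y.der); // prints 5
}
```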

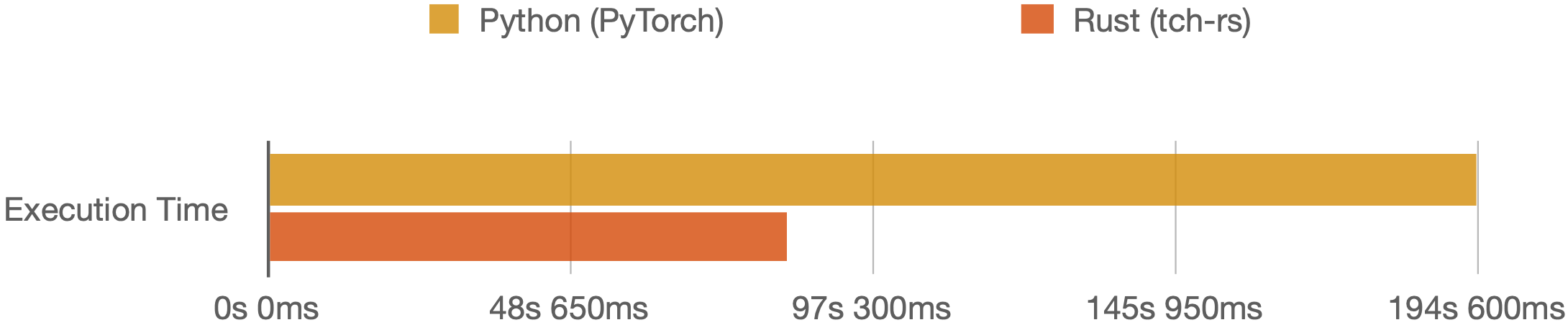

Performance

Let's measure the CPU-only (non-CUDA) performance of training a variational autoencoder (VAE) on the MNIST handwritten-digit dataset in both languages. (Sample data can be found here; performance was measured on a 2.4 GHz 8-Core Intel Core i9 with 32 GB of 2667 MHz DDR4.)

```
time cargo run --release --example vae
Finished release [optimized] target(s) in 0.09s
Running `target/release/examples/vae`
Epoch: 1, loss: 165.18017956334302
Epoch: 2, loss: 121.97916288457365
#...
Epoch: 19, loss: 104.23461658119136
Epoch: 20, loss: 104.10385677957127
cargo run --release --example vae 127.40s user 26.33s system 183% cpu 1:23.66 total
```

```
time python3 main.py --epochs=20
Train Epoch: 1 [0/60000 (0%)] Loss: 550.596191
Train Epoch: 1 [1280/60000 (2%)] Loss: 306.821533
# ...
Train Epoch: 20 [57600/60000 (96%)] Loss: 105.983673
Train Epoch: 20 [58880/60000 (98%)] Loss: 101.149338
====> Epoch: 20 Average loss: 103.9320
====> Test set loss: 103.9573
python3 main.py --epochs=20 240.30s user 31.12s system 139% cpu 3:14.57 total
```

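The native performance of Rust really comes through on this test: the Rust run finished in 1:23.66 of wall-clock time versus 3:14.57 for Python, roughly 2.3x faster, and used 127.40 s of user CPU time versus 240.30 s. Since both programs call into libtorch for the heavy tensor math, the gap most likely comes from the work around those kernels, such as interpreter overhead and data handling. The two scripts also aren't identical; the Python one additionally reports a test-set loss.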
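The speedup can be recomputed from the two time outputs above. Here is a small std-only Rust sketch; wall_clock_secs is a hypothetical helper that assumes the m:ss.xx wall-clock format shown in those outputs.

```rust
/// Hypothetical helper: parses a `time`-style wall-clock string such as
/// "1:23.66" (minutes:seconds) into seconds. Returns None on bad input.
fn wall_clock_secs(s: &str) -> Option<f64> {
    let (min, sec) = s.split_once(':')?;
    Some(min.parse::<f64>().ok()? * 60.0 + sec.parse::<f64>().ok()?)
}

fn main() {
    // Wall-clock totals copied from the two `time` outputs.
    let rust = wall_clock_secs("1:23.66").unwrap();
    let python = wall_clock_secs("3:14.57").unwrap();
    println!("Rust: {rust:.2} s, Python: {python:.2} s");
    println!("wall-clock speedup: {:.2}x", python / rust);
    // User CPU seconds, also from the `time` outputs.
    println!("user CPU ratio: {:.2}x", 240.30 / 127.40);
}
```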

Cons

Whether these benefits outweigh Rust's learning curve depends on your project's constraints. If you have a deep bench of C++ talent and tools, you may want to stick with that.

Another downside is that, for the foreseeable future, established ML frameworks in Rust are likely to be wrappers around C++ libraries. That makes debugging more of a chore, since problems can cross the language boundary, and it makes the right documentation harder to dig out. Some of the wrapper APIs won't be fully idiomatic Rust either.

At the end of the day, prototyping in Python is still preferable in the early stages of a project. The Python ecosystem is more extensive and will help you validate your approach sooner rather than later.

Summary

Rust gives you leverage when both performance and productivity are essential, and in some cases that adds up to an edge over C++.

Given the meteoric rise in Rust's popularity, the fact that it's being considered for Linux kernel development, and its growing adoption in the ML space, Tempus Ex is excited about building real-time systems with Rust, including in the ML domain. See our careers page for Rust and other opportunities!

Special thanks to Jason Yosinski for reading drafts of this.